A brief rundown of Solana’s high-performance permissionless network design

A high-performance permissionless network — is that even possible?

In a permissionless network anyone on the Internet can download the software, join the network and participate in any of its functions. For us that means that any node can be a leader or validator.

Here are some of the challenges:

- We are on the open Internet, so any node can be DDoSed. This topic deserves its own article and will be covered in the future.

- Signature verification is our only method for authenticating messages. We cannot trust IP addresses.

- Only when a dishonest node signs an invalid message can we punish them by presenting that message to the rest of the network.

- Only when two-thirds of the network recognizes that the signed message was dishonest does the punishment take effect.

- We can only punish nodes by removing their tokens — and only those that belong to the public key identity of the signature.

- Individual observations about the network from external nodes cannot be trusted. We can only aggregate them and hope that a supermajority of the network is honest.
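Taken together, the last few points reduce to one rule: the evidence is a message signed by the offender, and punishment applies only after a supermajority agrees. Below is a minimal sketch of that rule. It assumes the two-thirds threshold is weighted by stake, which matches how the first layer is chosen later in this post. It also assumes that "removing their tokens" means zeroing the signer's balance. The function names are hypothetical, and signature verification itself is left out.

```python
def supermajority(stakes: dict, voters) -> bool:
    """True if `voters` hold at least 2/3 of total stake (integer math, no floats)."""
    total = sum(stakes.values())
    agreeing = sum(stakes.get(v, 0) for v in set(voters))
    return 3 * agreeing >= 2 * total


def punish(balances: dict, stakes: dict, offender: str, voters) -> bool:
    """Slash `offender` (the public key that signed the invalid message),
    but only if a stake-weighted supermajority agrees it was dishonest.
    Assumption: slashing sets the offender's balance to zero."""
    if not supermajority(stakes, voters):
        return False
    # Only tokens owned by the signing identity can be taken.
    balances[offender] = 0
    return True
```

Integer comparison (`3 * agreeing >= 2 * total`) avoids float rounding right at the two-thirds boundary.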

Message Flow in Solana

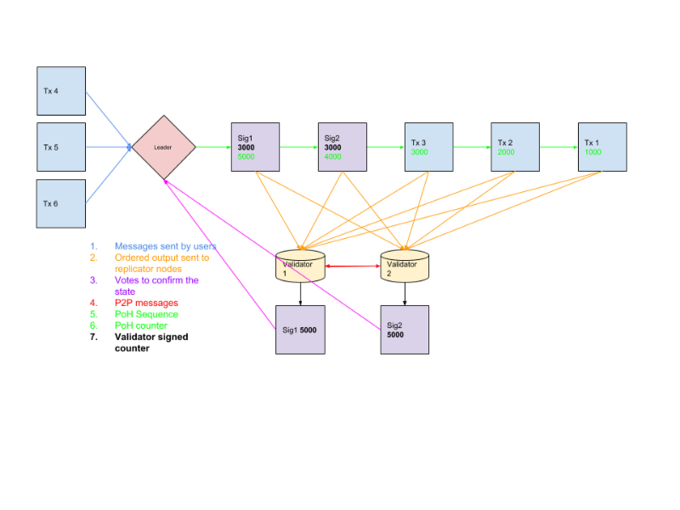

Figure 1. Message flow through the network.

As shown in Figure 1, client messages arrive at the current leader. The leader inserts them into a Proof of History (PoH) stream and broadcasts that stream to the rest of the network, whose nodes act as validators. The challenge in this design is that the leader can ingest 1 gigabit per second of data and must somehow deliver that same 1 gigabit per second of output to multiple machines at the same time. It can’t send the full stream to every machine, because N full copies would need N gigabits per second of outbound bandwidth. Our approach is to split the bandwidth between downstream validators and have them reassemble the stream.
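The bandwidth argument above can be made concrete with a bit of arithmetic. This sketch assumes the "1 gigabit" is a per-second rate and that each validator forwards its share to every other first-layer validator, as described for Figure 2. `fanout_budget` is a hypothetical helper, not code from the Solana repository.

```python
def fanout_budget(ingress_gbps: float, n_validators: int) -> dict:
    """Compare leader egress for naive broadcast vs. splitting the stream."""
    share = ingress_gbps / n_validators  # what each validator gets from the leader when splitting
    return {
        # Naive: every validator receives its own full copy from the leader.
        "naive_leader_egress": ingress_gbps * n_validators,
        # Split: each packet leaves the leader exactly once.
        "split_leader_egress": share * n_validators,
        "per_validator_share": share,
        # Each validator forwards its share to the other n - 1 first-layer peers.
        "validator_retransmit": share * (n_validators - 1),
    }
```

With `fanout_budget(1.0, 4)`, the leader sends 1 Gbps instead of 4. Each validator receives 0.25 Gbps from the leader and forwards 0.75 Gbps, so the cost of fanning out is spread across the layer rather than concentrated on the leader's link.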

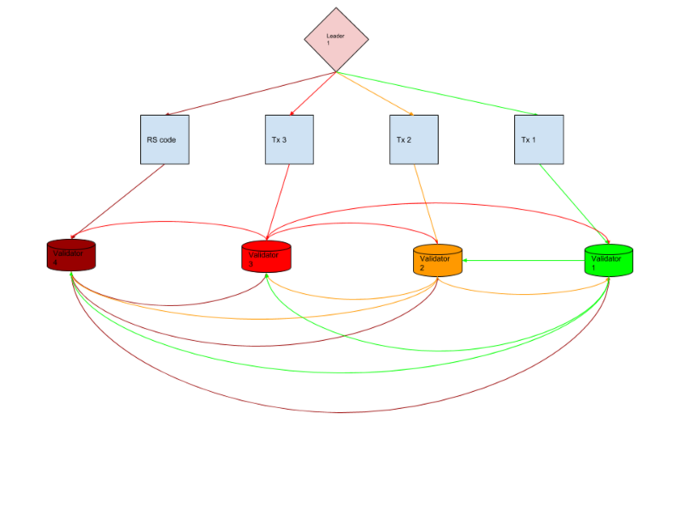

Figure 2. Avalanche packet flow.

Figure 2 shows how the leader splits the bandwidth between downstream nodes. The leader sends packets of up to 64 KB, round robin, to the validators in the first layer. Each validator immediately broadcasts the packet it received to everyone else in the first layer. This should pipeline the transmission of the second packet from the leader to validator 2 with the broadcast of the first packet from validator 1 to the rest of the network.
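The following sketch simulates the round-robin fan-out in a single process. No packets are lost and there is no network. It shows that every first-layer validator ends up with the whole stream, even though the leader sends each packet only once. `chunk`, `avalanche`, and `MAX_PACKET` are illustrative names, and real blobs carry headers that are omitted here.

```python
MAX_PACKET = 64 * 1024  # 64 KB per packet, as described above


def chunk(stream: bytes, size: int = MAX_PACKET) -> list:
    """Split the leader's stream into packets of at most `size` bytes."""
    return [stream[i:i + size] for i in range(0, len(stream), size)]


def avalanche(stream: bytes, n_validators: int) -> list:
    """Simulate one first layer: leader round robin, then validator rebroadcast."""
    received = [{} for _ in range(n_validators)]  # per validator: seq -> payload
    for seq, payload in enumerate(chunk(stream)):
        first = seq % n_validators            # leader sends packet `seq` to one validator
        received[first][seq] = payload
        for peer in range(n_validators):      # that validator forwards it to the rest of the layer
            if peer != first:
                received[peer][seq] = payload
    # Every validator reassembles the stream in sequence order.
    return [b"".join(r[s] for s in sorted(r)) for r in received]
```

For example, a 256,000-byte stream splits into four packets. With three validators, each one reconstructs the original bytes.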

Two kinds of packets are transmitted by the leader.

- Data packets, which carry PoH data showing that real time is passing, along with the transactions that are hashed into that PoH data.

- RS packets, which are Reed-Solomon codes that can be used to reconstruct the dataset if any of the packets are dropped.
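A Proof of History stream is essentially a sequential hash chain. Each step hashes the previous output, and transactions are mixed in at particular steps, so their position in the chain records when they were seen. Here is a minimal sketch of that idea. It assumes SHA-256 and the mixing rule `sha256(state || tx)`. Both are choices made for this illustration, not a description of Solana's exact entry format.

```python
import hashlib


def poh_stream(seed: bytes, num_hashes: int, transactions: dict):
    """Run a hash chain for `num_hashes` steps.
    `transactions` maps a step index to tx bytes mixed in at that step (hypothetical format)."""
    state = hashlib.sha256(seed).digest()
    entries = []  # (step, tx, state after mixing) for each recorded transaction
    for i in range(1, num_hashes + 1):
        tx = transactions.get(i)
        if tx is None:
            state = hashlib.sha256(state).digest()       # plain tick: time passing
        else:
            state = hashlib.sha256(state + tx).digest()  # assumed mixing rule
            entries.append((i, tx, state))
    return state, entries


def verify(seed: bytes, num_hashes: int, transactions: dict, expected: bytes) -> bool:
    """Replay the chain; any change in order, count, or content changes the final hash."""
    final, _ = poh_stream(seed, num_hashes, transactions)
    return final == expected
```

Replaying the chain is how a validator checks the stream. Swapping two transactions, or dropping a single tick, produces a different final hash.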

RS Code packets take up some of the available bandwidth, so they reduce our throughput, but they allow each validator to repair the transmitted data structure if a few random packets are dropped.
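To show why a few extra RS packets let a validator rebuild dropped data, here is a small systematic Reed-Solomon erasure code in evaluation form. The k data packets define a polynomial per byte column. Parity packets are evaluations of that polynomial at extra points, so any k surviving packets recover the rest through Lagrange interpolation. Production RS libraries normally work over GF(2^8), where parity symbols fit in a byte. This sketch uses the prime field GF(257) because the arithmetic is easier to read, so parity symbols may take the value 256 and are kept as Python ints. It requires Python 3.8+ for `pow(x, -1, p)`. The names are hypothetical and do not refer to Solana's code.

```python
P = 257  # prime field; every byte value 0..255 is a field element


def _interpolate_at(points, x):
    """Evaluate at x the unique polynomial through `points` (Lagrange, mod P)."""
    total = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if i != j:
                num = num * (x - xj) % P
                den = den * (xi - xj) % P
        total = (total + yi * num * pow(den, -1, P)) % P
    return total


def encode(data: list, parity: int) -> list:
    """Shards 0..k-1 are the data packets; shards k..k+parity-1 are RS parity."""
    k, length = len(data), len(data[0])
    assert all(len(d) == length for d in data), "pad packets to equal length"
    shards = [list(d) for d in data]
    for x in range(k, k + parity):
        shards.append([_interpolate_at([(i, data[i][c]) for i in range(k)], x)
                       for c in range(length)])
    return shards


def decode(received: dict, k: int) -> list:
    """Rebuild the k data packets from any k surviving shards {index: shard}."""
    if len(received) < k:
        raise ValueError("not enough shards to recover the data")
    use = sorted(received)[:k]
    length = len(received[use[0]])
    out = []
    for i in range(k):
        if i in received:
            out.append(bytes(received[i]))
        else:
            out.append(bytes(_interpolate_at([(j, received[j][c]) for j in use], i)
                             for c in range(length)))
    return out
```

With three data packets and two parity packets, any two of the five can be dropped. The trade-off described above is visible here: two parity packets cost two packets' worth of bandwidth.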

The set of first-layer validators should be a supermajority of the nodes, weighted by stake size, plus the number of nodes expected to fail at any given time. The extra RS Code packets and the extra nodes in the first layer give the network some room for dropped packets and hardware failures.
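Below is one way to turn the sizing rule above into a selection procedure. It assumes a greedy choice: take nodes in descending order of stake until they hold at least two-thirds of the total, then add `expected_failures` more from the next-largest stakes. The post does not say how the nodes are actually picked, so both the ordering and the `first_layer` name are hypothetical.

```python
def first_layer(stakes: dict, expected_failures: int) -> list:
    """Greedy sketch: a stake-weighted supermajority plus spare nodes for failures."""
    total = sum(stakes.values())
    ranked = sorted(stakes, key=lambda n: stakes[n], reverse=True)
    chosen, acc = [], 0
    for node in ranked:
        if 3 * acc >= 2 * total:  # already hold >= 2/3 of the stake
            break
        chosen.append(node)
        acc += stakes[node]
    # Pad with the next-largest nodes to absorb expected hardware failures.
    spares = [n for n in ranked if n not in chosen][:expected_failures]
    return chosen + spares
```

For stakes `{"a": 50, "b": 20, "c": 15, "d": 10, "e": 5}`, nodes `a` and `b` already hold 70% of the stake. Allowing for one expected failure adds `c`.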

Check it out on GitHub

Here are the leader’s broadcast loop, the validators’ retransmission loop, and the validators’ window loop.

Just like with a TCP window, each validator may see packets arrive out of order and needs to reconstruct the window. If a validator is missing a packet in the window and cannot repair it with Reed-Solomon codes, it can also ask random nodes in the network for the missing packet.
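Here is a sketch of such a window. It buffers out-of-order packets by sequence number, delivers the contiguous prefix, and lists the gaps that still need a repair request to a random peer. The window size of 1024, the class and function names, and the `(peer, seq)` request shape are all assumptions made for illustration.

```python
import random


class Window:
    """Receive window indexed by packet sequence number, like a TCP window."""

    def __init__(self, start: int = 0, size: int = 1024):
        self.next = start   # first sequence number not yet delivered
        self.size = size    # how far ahead of `next` we are willing to buffer
        self.slots = {}     # seq -> packet, for out-of-order arrivals

    def insert(self, seq: int, blob: bytes) -> None:
        if self.next <= seq < self.next + self.size:  # drop stale or too-far-ahead packets
            self.slots[seq] = blob

    def drain(self) -> list:
        """Deliver the contiguous run of packets starting at `next`."""
        out = []
        while self.next in self.slots:
            out.append(self.slots.pop(self.next))
            self.next += 1
        return out

    def missing(self, highest_seen: int) -> list:
        """Gaps below the highest sequence number seen so far."""
        end = min(highest_seen + 1, self.next + self.size)
        return [s for s in range(self.next, end) if s not in self.slots]


def repair_requests(window: Window, highest_seen: int, peers: list, rng: random.Random) -> list:
    """Pair each missing sequence number with a randomly chosen peer to ask."""
    return [(rng.choice(peers), seq) for seq in window.missing(highest_seen)]
```

If packets 0, 2 and 3 arrive, `drain()` delivers only packet 0, and `missing(3)` reports `[1]`. Once packet 1 is repaired, the next `drain()` delivers 1, 2 and 3 in order.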

Window repair responses are sent to a different port than the general broadcast, so they skip past all the packets waiting in the kernel’s receive queue for the broadcast UDP socket.
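The effect of the separate port can be demonstrated on loopback. Each UDP socket has its own kernel receive queue, so a repair response sent to the repair port can be read right away, even while a backlog of broadcast packets is still queued on the other socket. The socket roles, names and backlog size below are illustrative assumptions.

```python
import socket


def udp_socket() -> socket.socket:
    s = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    s.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
    s.settimeout(1.0)
    return s


def demo(backlog: int = 64):
    broadcast = udp_socket()  # general broadcast port
    repair = udp_socket()     # separate port for window repair responses
    sender = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        # Fill the broadcast socket's kernel receive queue.
        for i in range(backlog):
            sender.sendto(b"blob-%d" % i, broadcast.getsockname())
        sender.sendto(b"repair-1", repair.getsockname())
        # The repair response is readable immediately, without first
        # draining the packets still queued on the broadcast socket.
        first_repair, _ = repair.recvfrom(65535)
        first_broadcast, _ = broadcast.recvfrom(65535)
        return first_repair, first_broadcast
    finally:
        for s in (broadcast, repair, sender):
            s.close()
```

If the repair response had been sent to the broadcast port instead, it would sit behind the whole backlog in the same queue.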

CRDTs

Most of the above functions are implemented inside the crdt module. CRDTs are Conflict-free Replicated Data Types. When we speak of the network, this is really what we mean: a table of versioned structs called ReplicatedData. These structures are randomly propagated through the network, and the latest version is always picked.
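Here is a minimal sketch of the "latest version wins" table. The field names other than the struct's name are hypothetical stand-ins for the contents described in the next paragraph. The sketch assumes each identity is the only writer of its own entry, so two different records never share an (id, version) pair. Under that assumption, the merge result does not depend on the order in which updates arrive, which is what makes the table conflict-free.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReplicatedData:
    id: str            # hypothetical: the node's public key identity
    version: int       # bumped by the owning node on every change
    gossip_addr: str   # hypothetical: one of the node's port addresses
    state_hash: str    # hypothetical: current hash of the blockchain state


def merge(table: dict, update: ReplicatedData) -> bool:
    """Apply an update if it is newer than what we hold; return True if applied."""
    current = table.get(update.id)
    if current is None or update.version > current.version:
        table[update.id] = update
        return True
    return False  # stale or duplicate update: keep the newer entry
```

Applying the same updates in any order yields the same table, and re-applying an old update is a no-op.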

The ReplicatedData structure contains information about the node, such as its port addresses, its public key identity, and the current hash of its blockchain state. Each node in the network asks randomly chosen nodes whether they have any updates and applies those updates to its own table. Eventually, all of these messages will have to be signed by the identities that produced them.

Randomly querying the network for updates is our very bare-bones gossip network. It allows nodes to route around the hardware failure of any single node, as long as some path through the network still connects them. The leader needs to transmit enough RS Code packets to cover the churn in this table. There are tests for various topologies.
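The following simulation shows random pull gossip converging while one node is down. In each round, every live node picks a random peer and merges that peer's table into its own using the highest-version rule. Requests to the failed node simply go unanswered. The round structure, the `gossip` and `merge` helpers, and the fixed seed are assumptions made for illustration. The simulation is not Solana's protocol.

```python
import random


def merge(local: dict, remote: dict) -> None:
    """Keep the highest version of every entry (id -> (version, info))."""
    for node_id, (version, info) in remote.items():
        if node_id not in local or version > local[node_id][0]:
            local[node_id] = (version, info)


def gossip(n_nodes: int = 8, failed=frozenset(), seed: int = 0, max_rounds: int = 1000):
    """Run pull rounds until all live nodes hold identical tables."""
    rng = random.Random(seed)
    tables = {i: {i: (1, f"node-{i}")} for i in range(n_nodes)}
    live = [i for i in range(n_nodes) if i not in failed]
    for rnd in range(1, max_rounds + 1):
        for me in live:
            peer = rng.choice([p for p in range(n_nodes) if p != me])
            if peer in failed:
                continue  # request times out; try another random peer next round
            merge(tables[me], tables[peer])
        if all(tables[i] == tables[live[0]] for i in live):
            return rnd, tables
    raise RuntimeError("did not converge")
```

Even with node 3 down, every live node ends up with an entry for every other live node. The failed node only slows convergence, because some pull requests are wasted on it.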

We can optimize how fast we converge, but it needs to be done with care, since any “rumors” about other nodes in the network could be faked.

— —

Want to learn more about Solana?

Check us out on GitHub and find ways to connect on our community page.